Digital tools supporting interpreters in the booth

Computers and tablets

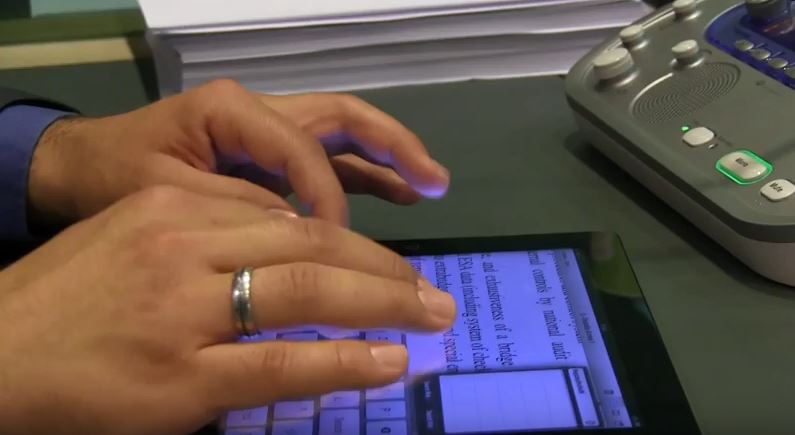

Mobile computers have been used by interpreters for years, including in the booth, because they are portable and lightweight, yet powerful. They provide access to documents, installed software (including dictionaries and other reference tools) and, of course, the internet, which enables online research and quick communication.

In recent years, tablet computers have also been adopted by some interpreters. Their advantages for interpreters are obvious: they are small and light and take up little space in the bag or in the booth. Noise, a well-known issue with traditional laptops, is not a problem at all, since tablets have neither a fan nor a built-in keyboard. Finally, their long battery life makes them truly mobile devices.

All things considered, tablets make a lot of sense for interpreters, both in the booth and on the go. In short, they can be a reliable tool for managing information.

Making and shaping our tools

Perhaps because interpreting is a comparatively niche field, interpreters have long been involved in making and/or shaping their own IT tools, mostly for glossary management. This is understandable: while software companies serve translators and terminologists quite well, nothing really fitted interpreters’ needs until practitioners took it upon themselves to work with programmers to create the necessary tools and adapt them to interpreting. Today, there is a considerable variety of software applications focusing on different aspects of interpreting work, from preparation to fast look-up on the job to cutting-edge technologies such as speech recognition and term extraction. Some of these specialise in social and collaborative features.

In a similar vein, interpreters are also involved in ongoing work on several commercial remote interpreting platforms, which, again, focus on different aspects of multilingual communication online.

New technologies, new modes

The use of new technologies has also enabled new approaches to interpreting, such as Simultaneous Consecutive. SCIC interpreter Michele Ferrari seems to have been the first to develop this hybrid mode, in the 1990s, initially using a PDA (Personal Digital Assistant) at a press conference given by then-Commissioner Neil Kinnock in Italy. It works as follows: the interpreter records the speech (using a mobile device or an audio-enabled “smart pen”) while taking only a few notes, e.g. of figures or names. When it is their turn, the interpreter listens to the recording and delivers what is essentially a simultaneous interpretation of it. Depending on the technology used, the interpreter can speed up segments of the recording that are redundant or slow down those that are particularly difficult. This technique, which several interpreters are actively developing, teaching and researching, appears to be of particular interest in settings that demand a high degree of accuracy and completeness.
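Because the playback rate can be changed segment by segment, the time needed to listen back is simply the sum of each segment's duration divided by its playback rate. The minimal sketch below illustrates that arithmetic under stated assumptions: the `Segment` class, the `listening_time` function and all durations and rates are hypothetical, chosen for illustration only, and real Simultaneous Consecutive setups depend on the device and software used.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    """One stretch of the recorded speech (hypothetical model, for illustration)."""
    label: str
    duration_s: float  # length of the segment in the original recording, in seconds
    rate: float        # playback rate chosen by the interpreter (1.0 = normal speed)


def listening_time(segments: list[Segment]) -> float:
    """Total time needed to play back all segments at their chosen rates."""
    for seg in segments:
        if seg.rate <= 0:
            raise ValueError(f"playback rate must be positive: {seg.label}")
    # Playing a segment at rate r takes duration / r seconds.
    return sum(seg.duration_s / seg.rate for seg in segments)


if __name__ == "__main__":
    # Hypothetical speech: values invented for illustration only.
    speech = [
        Segment("greetings and formalities", 60.0, 1.5),  # redundant: sped up
        Segment("core argument", 120.0, 1.0),              # normal speed
        Segment("figures and names", 30.0, 0.75),          # difficult: slowed down
    ]
    original = sum(seg.duration_s for seg in speech)
    print(f"original: {original:.0f} s, playback: {listening_time(speech):.0f} s")
```

With these made-up values, speeding up the redundant opening more than compensates for slowing down the difficult passage: playback takes 200 s against 210 s for the original speech.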

More recently, another hybrid mode called “Consecutiva a la vista” (“Sight-Consec”) has emerged. This technique also involves taking notes and recording the speech, but the recording is simultaneously fed into a speech recognition engine. The interpreter can then use the resulting transcript to support their consecutive interpretation or sight-translate it directly.

Interpreter training

Technology has also made inroads into interpreter training. The most significant development may be online speech banks. Initiatives like DG Interpretation’s Speech Repository or various online video channels provide ample material for use in class or in self-directed practice.

Other initiatives by interpreters build on speech banks, for example by creating an online community where professionals and students volunteer their time to give feedback and receive feedback in return. Communities of practice, both online and offline, are of growing interest to trainer-researchers.

Finally, video-conferencing solutions are used to supplement or even replace face-to-face teaching (“blended learning”). In one case, the entire first year of a Master’s programme in Conference Interpreting takes place online.

The "augmented interpreter"

A key objective of digitalisation is to help interpreters focus on interpreting rather than on searching for information whilst in the booth.

The burden of demanding tasks in the booth could be further reduced by combining three components:

- Speech recognition would convert participants’ speeches into text (a transcript) in the original language;

- Terminology extraction tools would in turn process this transcript to identify terms, figures and “named entities” in the source language;

- The equivalents in the interpreter’s active language would then be retrieved from glossaries or translated on the fly.
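The three components listed above can be sketched as a small pipeline. This is a minimal illustration under loud assumptions: `transcribe` is a placeholder standing in for a real speech recognition engine; the glossary entries are invented English-German examples; `extract` is a hypothetical helper that uses simple whole-word glossary matching and a regular expression for figures instead of a real terminology extraction tool, so named entities are only recognised if they appear in the glossary; and on-the-fly machine translation is left out.

```python
import re
from dataclasses import dataclass

# Hypothetical English-to-German booth glossary (source term -> target equivalent).
GLOSSARY = {
    "european commission": "Europäische Kommission",
    "internal market": "Binnenmarkt",
    "state aid": "staatliche Beihilfe",
}

# Figures: integers or decimals with "." or "," separators, optionally followed by "%".
FIGURE_PATTERN = re.compile(r"\d+(?:[.,]\d+)*%?")


@dataclass
class Prompt:
    """One item the booth display would show to the interpreter."""
    source: str
    target: str
    kind: str  # "term" or "figure"


def transcribe(audio_text: str) -> str:
    """Step 1 placeholder: a real system would call a speech recognition engine here.
    In this sketch the 'audio' is already text, so it is returned unchanged."""
    return audio_text


def extract(transcript: str, glossary: dict[str, str]) -> list[Prompt]:
    """Steps 2 and 3: find glossary terms and figures, attach target-language equivalents,
    and return them in the order they occur in the transcript."""
    found = []  # (position in transcript, prompt)
    for term, equivalent in glossary.items():
        # Whole-word, case-insensitive match; only the first occurrence is reported.
        match = re.search(rf"\b{re.escape(term)}\b", transcript, re.IGNORECASE)
        if match:
            found.append((match.start(), Prompt(match.group(), equivalent, "term")))
    for match in FIGURE_PATTERN.finditer(transcript):
        # Figures are passed through unchanged in this sketch.
        found.append((match.start(), Prompt(match.group(), match.group(), "figure")))
    found.sort(key=lambda item: item[0])
    return [prompt for _, prompt in found]


if __name__ == "__main__":
    speech = "The European Commission approved 3.5 billion euros of state aid in 2023."
    for p in extract(transcribe(speech), GLOSSARY):
        print(f"{p.kind:<6} {p.source} -> {p.target}")
```

For the sample sentence, the sketch flags “European Commission” and “state aid” as glossary terms and “3.5” and “2023” as figures, in the order in which they were spoken. A real tool would also need to handle terms missing from the glossary, which is where on-the-fly translation would come in.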